I am currently a master's student in the BCI&ML Laboratory at Huazhong University of Science and Technology (HUST), advised by Prof. Dongrui Wu. Before that, I received my B.Eng. from the School of Optical and Electronic Information at HUST, advised by Prof. Danhua Cao.

My interests span a wide range of topics in Artificial Intelligence (AI), including Brain-Computer Interfaces (BCIs), AI Security, and some fundamental problems in Deep Learning. I am currently working on the geometric analysis of the prediction landscape of deep neural networks (DNNs), through which I hope to explore how DNNs generalize and memorize. My ultimate goal is to understand the basic principles of the brain through AI research and, if I am lucky enough, to catch a glimpse of how to build artificial general intelligence (AGI).

Generalization of Deep Learning

Optimization Variance: Exploring Generalization Properties of DNNs

Xiao Zhang, Dongrui Wu, Haoyi Xiong, Bo Dai

Work in Progress

[PDF] [OpenReview] [bib] [code]

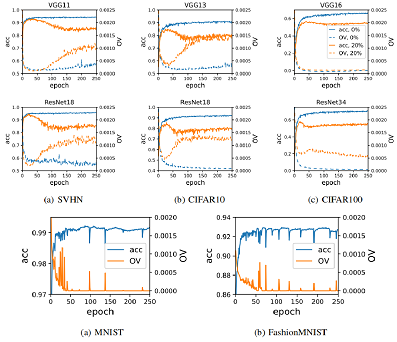

Inspired by the bias-variance decomposition, we propose a novel metric, called optimization variance (OV), to measure the diversity of model updates caused by the stochastic gradients of random training batches drawn in the same iteration. Although OV can be estimated from training samples only, it correlates well with the test error. It can therefore be used to predict the generalization ability of a DNN, which enables early stopping without any validation set.
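
A minimal NumPy sketch of how such a metric could be estimated is given below. It assumes a linear softmax classifier in place of a DNN, and it assumes OV is the variance of the model outputs across one-step SGD updates computed from different random batches, normalized by their mean squared norm; the paper's exact definition and normalization may differ. All function names are hypothetical and are not taken from the paper's code.

```python
import numpy as np

def softmax(z):
    # numerically stable row-wise softmax
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def sgd_step(W, X, y, lr):
    # one SGD step on the mean cross-entropy of a linear softmax classifier
    grad_logits = softmax(X @ W)
    grad_logits[np.arange(len(y)), y] -= 1.0
    return W - lr * (X.T @ grad_logits) / len(y)

def optimization_variance(W, X, y, lr=0.1, batch_size=32, n_batches=20, seed=0):
    # assumed definition: E_x Var_T[f(x; W + g(T))] / E_x E_T ||f(x; W + g(T))||^2,
    # where g(T) is the SGD update computed from a random training batch T
    rng = np.random.default_rng(seed)
    outputs = []
    for _ in range(n_batches):
        idx = rng.choice(len(X), size=batch_size, replace=False)
        W_next = sgd_step(W, X[idx], y[idx], lr)
        outputs.append(softmax(X @ W_next))   # evaluated on training samples only
    outputs = np.stack(outputs)               # (n_batches, n_samples, n_classes)
    variance = ((outputs - outputs.mean(axis=0)) ** 2).sum(axis=2).mean()
    second_moment = (outputs ** 2).sum(axis=2).mean()
    return variance / second_moment

# toy usage: a random two-class problem and an untrained (all-zero) model
rng = np.random.default_rng(1)
X = rng.normal(size=(500, 10))
y = (X[:, 0] > 0).astype(int)
W = np.zeros((10, 2))
print(optimization_variance(W, X, y))
```

One could, for example, compute this value at the end of each epoch and treat it as a training-set-only proxy for the test error; the batch count and step size here are arbitrary choices.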

Rethink the Connections among Generalization, Memorization and the Spectral Bias of DNNs

Xiao Zhang, Dongrui Wu, Haoyi Xiong

IJCAI 2021

[PDF] [arXiv] [bib] [code]

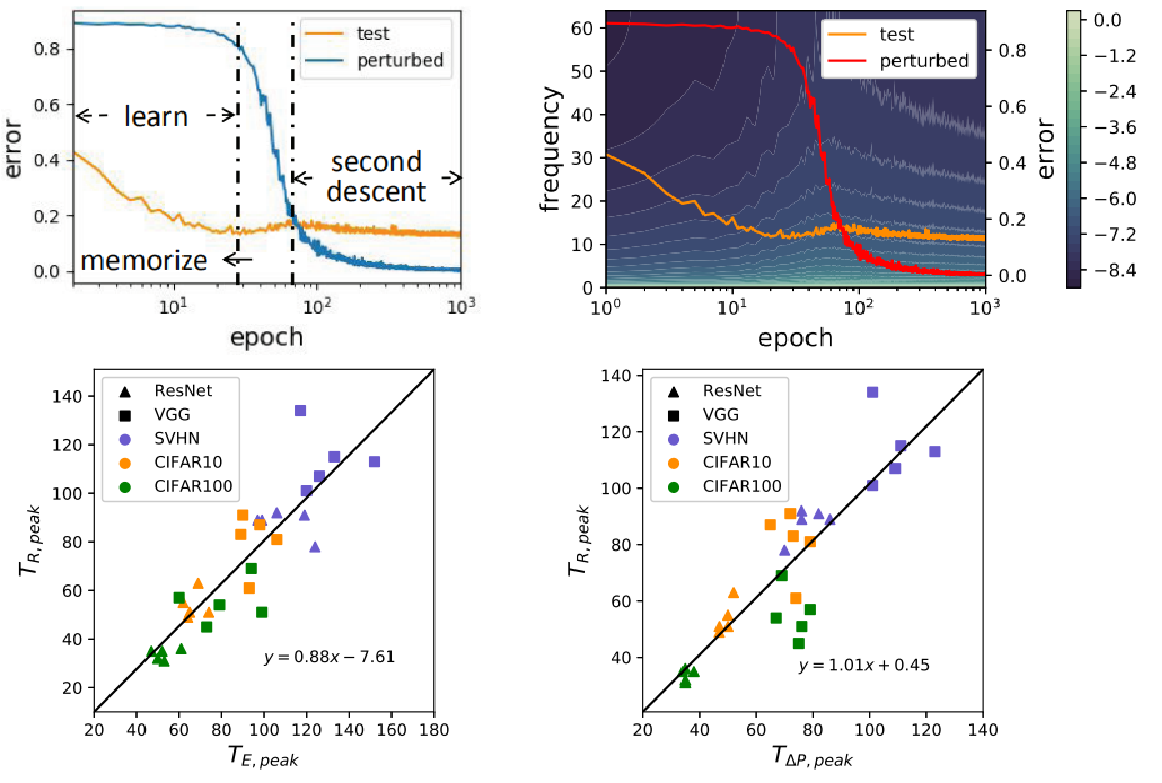

We show that, under the experimental setup of deep double descent, the high-frequency components of DNNs begin to diminish in the second descent, while the examples with random labels are still being memorized. Moreover, we find that the spectrum of a DNN can be used to monitor its test behavior, even though the spectrum is computed from the training set only.
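
The idea of probing a network's spectrum with training data alone can be illustrated with a small sketch. Here we assume (this is not necessarily the paper's procedure) that the spectrum is probed by sampling a scalar model output along a straight segment between two inputs, e.g. two training samples, and taking its discrete Fourier transform; `high_freq_ratio` and its `cutoff` parameter are hypothetical.

```python
import numpy as np

def high_freq_ratio(f, x0, x1, n_points=256, cutoff=0.25):
    # sample the scalar output of f along the segment from x0 to x1
    t = np.linspace(0.0, 1.0, n_points, endpoint=False)
    path = x0[None, :] + t[:, None] * (x1 - x0)[None, :]
    signal = f(path)
    signal = signal - signal.mean()            # remove the constant (DC) part
    power = np.abs(np.fft.rfft(signal)) ** 2   # power in each frequency bin
    k = int(cutoff * len(power))               # bins from k upward count as "high"
    total = power.sum()
    return float(power[k:].sum() / total) if total > 0 else 0.0

# toy usage on a 1-D input: a slowly varying vs. a rapidly oscillating function
def smooth(p):
    return np.sin(2 * np.pi * 3 * p[:, 0])

def wiggly(p):
    return np.sin(2 * np.pi * 50 * p[:, 0])

x0, x1 = np.array([0.0]), np.array([1.0])
print(high_freq_ratio(smooth, x0, x1), high_freq_ratio(wiggly, x0, x1))
```

In a real experiment `f` would be, for instance, the network's logit for one class, and the ratio could be averaged over many pairs of training samples.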

Empirical Studies on the Properties of Linear Regions in Deep Neural Networks

Xiao Zhang, Dongrui Wu

ICLR 2020

[PDF] [OpenReview] [arXiv] [bib]

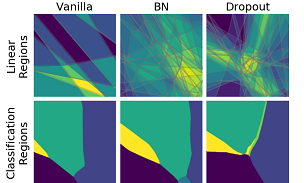

We offer a novel, fine-grained perspective on DNNs: instead of merely counting the linear regions, we study their local properties, such as their inspheres, the directions of the corresponding hyperplanes, the decision boundaries, and the relevance of the surrounding regions. We observe empirically that different optimization techniques lead to completely different linear regions, even though they yield similar classification accuracies.
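
For a network with a single hidden ReLU layer, each linear region is the set of inputs sharing one activation pattern, i.e. an intersection of half-spaces. The NumPy sketch below is a simplification we assume for illustration (the paper studies deeper networks and computes the actual inspheres): it finds the activation pattern at a point and its closest region boundary, whose distance bounds the insphere radius from below and whose normal is one of the hyperplane directions. Function names are hypothetical.

```python
import numpy as np

def activation_pattern(W, b, x):
    # on/off state of each hidden ReLU unit at input x; inputs sharing
    # the same pattern lie in the same linear region of a one-hidden-layer net
    return (W @ x + b > 0).astype(int)

def nearest_boundary(W, b, x):
    # distance from x to each unit's hyperplane {z : W[i] @ z + b[i] = 0}
    dists = np.abs(W @ x + b) / np.linalg.norm(W, axis=1)
    i = int(np.argmin(dists))
    # dists[i] is the radius of the largest ball centred at x that stays inside
    # x's linear region (a lower bound on the region's inradius);
    # W[i] / ||W[i]|| is the unit normal of that closest boundary
    return dists[i], W[i] / np.linalg.norm(W[i])

# toy usage: 3 hidden units on a 2-D input
W = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
b = np.array([0.0, 0.0, -1.0])
x = np.array([0.5, 0.2])
print(activation_pattern(W, b, x), nearest_boundary(W, b, x))
```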

Security in Brain-Computer Interfaces

Tiny Noise, Big Mistakes: Adversarial Perturbations Induce Errors in Brain-Computer Interface Spellers

Xiao Zhang, Dongrui Wu, Lieyun Ding, Hanbin Luo, Chin-Teng Lin, Tzyy-Ping Jung, Ricardo Chavarriaga

NSR 2020

[PDF] [appendix] [NSR] [arXiv] [bib] [code]

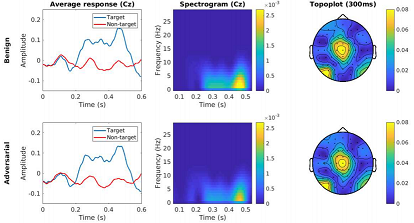

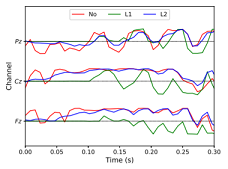

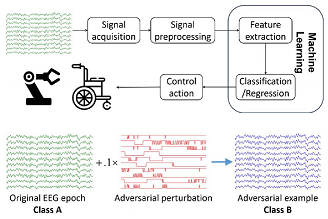

We show that P300 and steady-state visual evoked potential (SSVEP) BCI spellers can be severely attacked by adversarial perturbations, which can mislead the spellers into spelling anything the attacker wants. What most distinguishes our attack approaches from previous ones is that they explicitly consider causality when designing the perturbations. More importantly, we attack the SSVEP speller, even though it has no fixed model.

Universal Adversarial Perturbations for CNN Classifiers in EEG-Based BCIs

Zihan Liu*, Xiao Zhang*, Lubin Meng, Dongrui Wu

TNSRE 2020 (Submitted)

[arXiv] [bib] [code]

We propose a novel total loss minimization (TLM) approach to generate targeted universal adversarial perturbations (UAPs) for EEG-based BCIs. Our approach achieves better attack performance with smaller perturbations than the previous DeepFool-based UAP approach.
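
A toy sketch in the spirit of total loss minimization: a single perturbation is optimized by gradient descent on the total targeted loss over all inputs, then clipped to a small L∞ ball. This is our own simplification, assuming a linear softmax classifier instead of an EEG CNN and an L∞ clip as the size constraint; `targeted_uap` and its parameters are hypothetical and are not the paper's code.

```python
import numpy as np

def softmax(z):
    # numerically stable row-wise softmax
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def targeted_uap(W, X, target, eps=0.5, lr=0.1, steps=200):
    # a single perturbation v shared by every input in X (hence "universal")
    v = np.zeros(X.shape[1])
    onehot = np.zeros(W.shape[1])
    onehot[target] = 1.0
    for _ in range(steps):
        p = softmax((X + v) @ W)
        # gradient of the mean cross-entropy towards `target` w.r.t. v
        grad = ((p - onehot) @ W.T).mean(axis=0)
        v = np.clip(v - lr * grad, -eps, eps)   # stay inside the L-inf ball
    return v

# toy usage: a 2-class linear classifier that decides on the sign of x[0]
rng = np.random.default_rng(0)
W = np.array([[1.0, -1.0], [0.0, 0.0]])
X = rng.normal(scale=0.1, size=(100, 2))
v = targeted_uap(W, X, target=1)
print(v, np.mean(np.argmax((X + v) @ W, axis=1) == 1))
```

Because the loss is summed over all inputs rather than handled one sample at a time, the same `v` pushes every input towards the target class.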

On the Vulnerability of CNN Classifiers in EEG-Based BCIs

Xiao Zhang, Dongrui Wu

TNSRE 2019

[PDF] [arXiv] [bib]

We investigate the vulnerability of CNN classifiers in EEG-based BCI systems. We generate adversarial examples by injecting a jamming module before a CNN classifier to fool it. We consider three scenarios (white-box, gray-box, and black-box attacks) and propose a separate attack strategy for each. Experiments on three EEG datasets and three CNN classifiers demonstrate the vulnerability of CNN classifiers in EEG-based BCIs.
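
For the white-box scenario, a minimal sketch of crafting an adversarial EEG trial is shown below. It assumes a logistic-regression classifier in place of a CNN and uses the fast gradient sign method (FGSM) as a stand-in for the paper's jamming module; `fgsm_logistic` is a hypothetical helper, and the gray-box and black-box strategies are not covered.

```python
import numpy as np

def fgsm_logistic(w, b, x, y, eps):
    # x: one EEG trial of shape (channels, samples); the toy model is linear
    z = x.ravel()
    p = 1.0 / (1.0 + np.exp(-(w @ z + b)))   # P(class 1 | x)
    grad = (p - y) * w                        # gradient of binary cross-entropy w.r.t. z
    z_adv = z + eps * np.sign(grad)           # one signed-gradient step (FGSM)
    return z_adv.reshape(x.shape)

# toy usage: 4 channels x 8 time samples, true label 1
w = np.ones(32)
b = 0.0
x = np.full((4, 8), 0.01)
x_adv = fgsm_logistic(w, b, x, y=1, eps=0.05)
print(w @ x.ravel() + b, w @ x_adv.ravel() + b)
```

Every sample moves by at most `eps`, yet the accumulated effect across all channels and time points flips the sign of the decision score.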

BCI Community (脑机接口社区) - HUST research team reveals security issues in EEG-based brain-computer interfaces, 2020

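Science China (中国科学杂志) - Typing with your mind or controlling a wheelchair through a brain-computer interface? Beware of being hijacked by hackers!, 2020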

EurekAlert - Are brain-computer interface spellers secure?, 2020

Tech Xplore - Study unveils security vulnerabilities in EEG-based brain-computer interfaces, 2020

HUST - 1st Place - China Brain-Computer Interface Competition, 2019

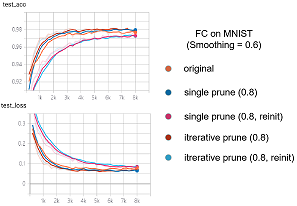

Lottery Ticket Hypothesis for DNNs [code (Python)]

This project provides an easy-to-use interface for searching for lottery tickets in PyTorch models.
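
The core step of a lottery-ticket search, as in the original iterative magnitude pruning recipe, is to prune the smallest surviving weights and rewind the rest to their initial values. The sketch below uses dictionaries of NumPy arrays as a stand-in for PyTorch state dicts; it does not reproduce this project's interface, and `prune_and_rewind` is a hypothetical name.

```python
import numpy as np

def prune_and_rewind(trained, init, masks, prune_frac=0.2):
    # trained, init, masks: dicts mapping a layer name to arrays of equal shape
    # global threshold over the magnitudes of weights that are still unpruned
    alive = np.concatenate([np.abs(trained[k][masks[k] == 1]) for k in trained])
    threshold = np.quantile(alive, prune_frac)
    new_masks, rewound = {}, {}
    for k in trained:
        # keep surviving weights whose trained magnitude exceeds the threshold
        new_masks[k] = ((np.abs(trained[k]) > threshold) & (masks[k] == 1)).astype(int)
        # rewind kept weights to their initial values: the candidate "ticket"
        rewound[k] = init[k] * new_masks[k]
    return new_masks, rewound

# toy usage: one 2x5 layer, all weights initially unpruned
trained = {"fc": np.arange(1, 11, dtype=float).reshape(2, 5)}
init = {"fc": np.ones((2, 5))}
masks = {"fc": np.ones((2, 5), dtype=int)}
new_masks, rewound = prune_and_rewind(trained, init, masks, prune_frac=0.2)
print(new_masks["fc"])
```

Alternating training with `prune_and_rewind` over several rounds removes roughly `prune_frac` of the remaining weights per round.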

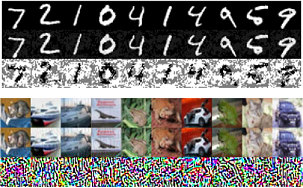

Keras Adversary [code (Python)]

This toolbox provides an easy-to-use interface for constructing adversarial examples against Keras models.

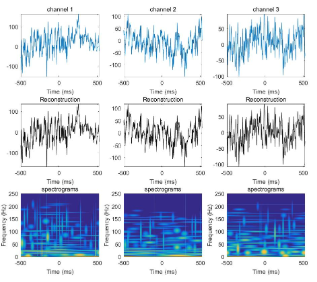

Analyzing Local Field Potential [code (MATLAB)]

This project focuses on analyzing local field potentials (LFPs). The data, provided by our collaborator Dr. Xiaomo Chen, were collected from two male rhesus monkeys trained to perform an oculomotor gambling task. We analyzed spectral features of the LFPs to determine which parts of the spectrum are relevant to the task.
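
A minimal sketch of one common spectral feature, the power of an LFP trace in standard frequency bands computed from a periodogram, is shown below. The band edges, sampling rate, synthetic trace, and `band_powers` helper are assumptions for illustration and are not taken from this project's MATLAB code.

```python
import numpy as np

# conventional band edges in Hz (an assumption, not the project's choice)
BANDS = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30), "gamma": (30, 80)}

def band_powers(lfp, fs):
    # lfp: 1-D trace; fs: sampling rate in Hz
    lfp = lfp - lfp.mean()                                  # remove the DC offset
    freqs = np.fft.rfftfreq(len(lfp), d=1.0 / fs)
    psd = np.abs(np.fft.rfft(lfp)) ** 2 / (fs * len(lfp))   # periodogram, up to a constant factor
    return {name: float(psd[(freqs >= lo) & (freqs < hi)].sum())
            for name, (lo, hi) in BANDS.items()}

# toy usage: a 2-second synthetic trace with a 20 Hz (beta-band) oscillation plus noise
fs = 1000
t = np.arange(2 * fs) / fs
rng = np.random.default_rng(0)
lfp = np.sin(2 * np.pi * 20 * t) + 0.1 * rng.normal(size=t.size)
powers = band_powers(lfp, fs)
print(max(powers, key=powers.get), powers)
```

Per-trial band powers like these could then be compared across task conditions to see which frequency bands carry task-related information.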

National Scholarship for Postgraduates, 2020

Goodix Scholarship for Technology, 2020

National Scholarship for Postgraduates, 2019

1st Place - China Brain-Computer Interface Competition, 2019

"Outstanding Graduate" of HUST, 2018

"Honor College Student" of Qiming College of HUST, 2018

2nd Place - The 7th Mathematics Competition of Chinese College Students, 2015

National Encouragement Scholarship, 2015